In my previous post, I talked about what LLMs actually are: sophisticated pattern-matching engines, not thinking machines. I explained how our evolutionary wiring makes us anthropomorphize them, and how they’re designed to exploit our brain’s dopamine system.

If that made you uncomfortable, good. It should.

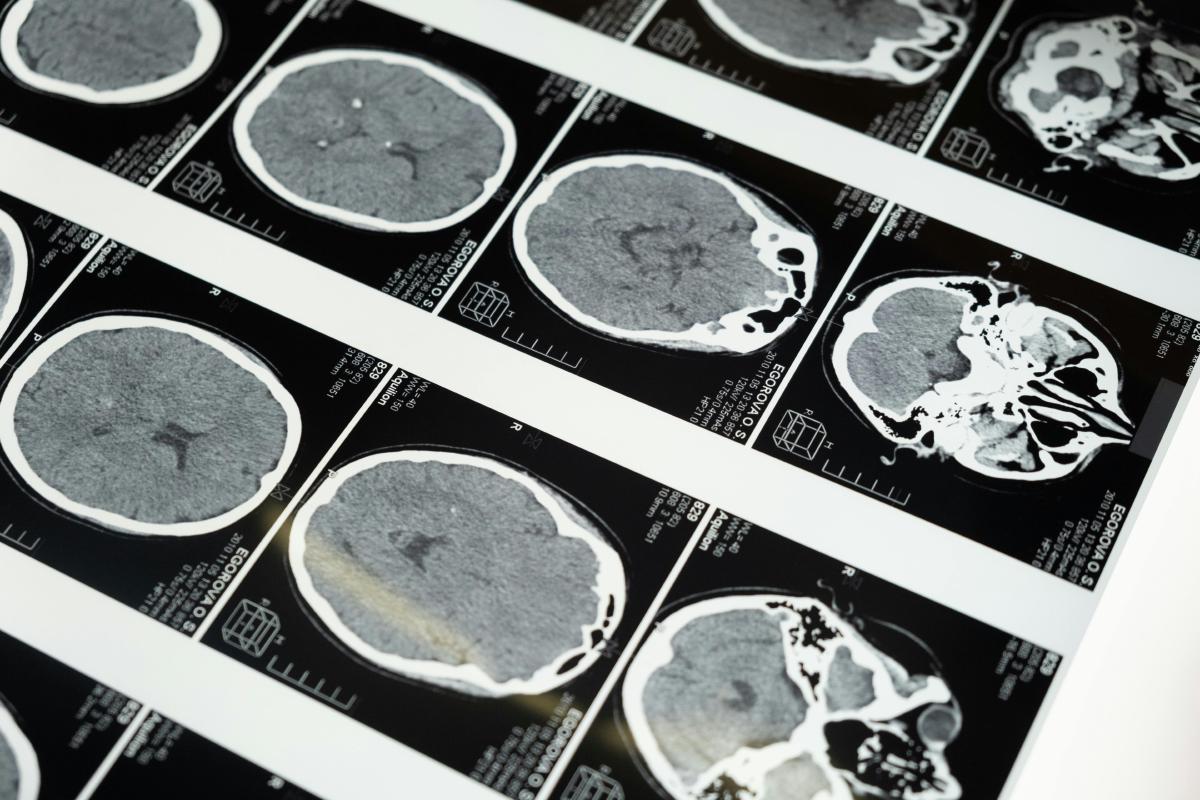

But here’s where it gets worse. We’re not just talking about theoretical risks or philosophical concerns about what it means to “think.” We now have hard data showing what happens to human cognition when we use these tools extensively.

And the results are genuinely alarming.

The Cognitive Decline Is Real

I wish this were just theoretical concern-trolling. It’s not.

A recent study from Microsoft Research analyzed 319 knowledge workers using generative AI tools. [1] The findings are stark: when workers had higher confidence in AI’s ability to do a task, they engaged in less critical thinking. Not because they were lazy, but because the tools are so good at producing plausible output that questioning it feels unnecessary.

The researchers found that AI tools reduce perceived effort for almost every type of cognitive activity. Need to recall information? AI does it faster. Need to analyze a problem? AI breaks it down. Need to synthesize ideas? AI combines them.

Sounds great, right? Maximum efficiency!

Except here’s what else they found: workers weren’t doing less work. They were doing different work. Instead of thinking through problems, they were managing AI outputs. Instead of learning and growing their expertise, they were becoming AI babysitters: editing, reformatting, and validating text they didn’t create and often didn’t fully understand.

But wait, it gets worse.

Another study from MIT used EEG to measure brain activity in people writing essays. [2] They compared three groups: people writing without any tools (brain-only), people using search engines, and people using LLMs.

The results are genuinely concerning.

LLM users showed the weakest brain connectivity patterns. Their neural engagement was significantly lower than even that of search engine users. Over four months, LLM users consistently underperformed at neural, linguistic, and behavioral levels.

Then the researchers did something clever. They had the LLM users try to write without the LLM. These participants struggled. Their alpha and beta brain connectivity (indicators of cognitive engagement) were suppressed. They had essentially accumulated what the researchers called “cognitive debt.”

Think about that. Four months of LLM use, and participants had measurably less brain activity when writing. Their cognitive muscles had atrophied.

To make it even more troubling: LLM users couldn’t accurately quote their own work. They had the lowest sense of ownership over their essays. They’d offloaded not just the work, but the thinking itself.

This isn’t “learning to use new tools.” This is cognitive decline.

We’ve Been Here Before (Sort Of)

This isn’t the first time technology has changed our brains. We’ve been here before. Sort of.

When calculators became ubiquitous, we stopped doing mental math. Studies show that children who rely heavily on calculators develop weaker number sense and struggle with estimation. But we accepted that trade-off. Mental arithmetic, while useful, isn’t fundamental to higher-order mathematical thinking.

When GPS became standard in our phones, we stopped building mental maps of our cities. And this one? The effects are measurable and concerning.

Research on London taxi drivers showed that intensive spatial navigation training actually enlarged their posterior hippocampus, the brain region responsible for spatial memory. [3] The hippocampus physically grew to accommodate their detailed mental maps of London’s complex street layout. Time spent as a taxi driver correlated positively with posterior hippocampal volume.

But here’s the flip side: when we started using GPS for everything, that cognitive work disappeared. A 2020 study found that people with greater lifetime GPS experience have significantly worse spatial memory when required to navigate without GPS. [4] Even more troubling: participants who increased their GPS use over a three-year period showed steeper declines in hippocampal-dependent spatial memory.

We outsourced spatial thinking to devices. And the spatial memory we stopped exercising got measurably worse.

But here’s the critical difference: spatial navigation is one cognitive skill. LLMs are coming for all of them. Memory formation, creative problem-solving, analytical thinking, writing, coding, reasoning. The scope is vastly larger, and the stakes are exponentially higher.

When GPS replaced our mental maps, we lost the ability to navigate without it. When LLMs replace our thinking, what exactly are we losing the ability to do?

Warning Signs You’re In Too Deep

How do you know if you’ve crossed the line from using AI as a tool to becoming dependent on it? Here are the warning signs:

You struggle to start tasks without opening ChatGPT first. If your first instinct when faced with any problem is to ask an LLM, you’ve stopped trusting your own ability to think through issues. The AI has become a crutch, not a tool.

You can’t remember what you “wrote” yesterday. When someone asks you about content you supposedly created, do you draw a blank? If you can’t recall the key arguments or structure of your own work, you didn’t really write it. You managed its production.

You feel anxious when the AI is unavailable. If ChatGPT being down or hitting rate limits causes genuine distress, you’re not just using a convenient tool. You’re dependent on it.

You’ve stopped questioning outputs that “sound right.” The fluency of LLM output is seductive. If you’re no longer checking facts, testing code, or verifying logic because the text seems plausible, your critical thinking has been disabled.

You’re defensive when someone questions your AI-generated work. If criticism feels like a personal attack even though you didn’t actually create the content, you’ve started identifying with the AI’s output rather than your own expertise.

You can’t explain how things work anymore. Can you walk someone through the logic of the code you “wrote”? Can you defend the arguments in the document you “drafted”? If you’re just a curator of AI output, you’re losing your expertise.

You’ve forgotten what it feels like to struggle with a problem. There’s cognitive value in the struggle. In wrestling with a difficult concept, in trying multiple approaches, in getting stuck and unstuck. If you skip straight to the AI answer, you’re missing the learning.

If you recognized yourself in more than a couple of these, it’s time to step back and rebuild your cognitive muscles. The good news? Like physical muscles, cognitive abilities can recover with deliberate practice. But you have to actually practice.

The Workplace Implications

This isn’t just a personal problem. Organizations are facing a looming crisis they don’t yet understand.

The knowledge transfer problem. When senior employees use AI to do work that would traditionally be learned by junior staff, institutional knowledge never gets transferred. The junior employee learns to prompt ChatGPT, not to actually solve problems. Five years from now, when the senior people retire, who will train the AI?

The quality control crisis. If everyone is using AI to generate work, and no one fully understands what they’ve generated, how do you maintain quality standards? You can’t review what you don’t understand. We’re building a house of cards where nobody knows if the foundation is solid.

The innovation trap. When everyone uses the same AI tools, everyone gets similar solutions. LLMs are trained on past patterns. They excel at generating “best practices” that sound plausible. But innovation doesn’t come from best practices. It comes from understanding principles deeply enough to know when to break the rules.

The expertise vacuum. Here’s the terrifying scenario: a generation of workers who never developed deep expertise because AI was always there to shortcut the learning process. They can prompt effectively. They can edit output. But they can’t create from scratch. They can’t solve novel problems. They can’t train the next generation.

And when the AI gets something wrong in their domain, they won’t know it’s wrong.

Organizations need to wake up to this. The short-term productivity gains from AI are real. But the long-term cost, the systematic de-skilling of your entire workforce, could be catastrophic.

Some companies are starting to notice. Reports of junior developers who can’t code without Copilot. Analysts who can’t write reports without ChatGPT. Writers who’ve forgotten how to structure an argument.

The question isn’t whether to use AI. It’s how to use it without destroying the very expertise that makes your organization valuable.

How To Actually Use AI (Without Destroying Your Brain)

Now, I’m not saying never use LLMs. I use them. They’re useful for specific tasks. But we need to stop pretending they’re thinking partners and start treating them as what they are: sophisticated text generators that are really good at bullshitting.

Here’s my framework:

First: Define the problem yourself. Before you touch ChatGPT or Claude or whatever LLM is hot this week, write down what you’re actually trying to solve. Not what you’ll ask the AI, but what the actual problem is. This forces you to think.

Second: Understand the concept of the solution. If you’re asking an LLM to generate code, you should understand what that code needs to do and roughly how it should work. If you’re asking it to analyze data, you should know what kind of analysis is appropriate. If you’re asking it to write something, you should have a clear sense of the argument or structure you want.

If you can’t do this, you’re not using AI to augment your expertise. You’re using it to replace expertise you don’t have. And that’s a problem, because you won’t be able to evaluate whether the output is actually good.

Third: Treat the output as a draft from a well-meaning but frequently wrong intern. It might be great. It might be completely wrong. It might be subtly wrong in ways that are hard to detect. Your job is to verify, validate, and own the result.

This means:

- Check facts against authoritative sources

- Verify that code actually works (and understand why it works)

- Make sure the logic actually makes sense

- Test edge cases the AI might not have considered

- Rewrite anything that doesn’t match your voice or needs
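To make the code items on that checklist concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `average_draft` stands in for a plausible-looking helper an LLM might hand you, `average` is what it might look like after review, and `check_edge_cases` is just one simple way to poke at both. It is an illustration of the habit, not a prescribed workflow.

```python
def average_draft(values):
    """Hypothetical LLM-drafted helper: reads fine, but nobody asked about empty input."""
    return sum(values) / len(values)


def average(values):
    """Reviewed version: what happens on empty input is now an explicit decision."""
    if not values:
        raise ValueError("average() needs at least one value")
    return sum(values) / len(values)


def check_edge_cases(func):
    """Run a few inputs through func and record the result or the exception name."""
    cases = {"typical": [1, 2, 3], "single": [5], "empty": []}
    report = {}
    for name, data in cases.items():
        try:
            report[name] = func(data)
        except Exception as exc:  # record the failure instead of crashing the check
            report[name] = type(exc).__name__
    return report


if __name__ == "__main__":
    print(check_edge_cases(average_draft))
    print(check_edge_cases(average))
```

The draft handles the typical and single-value cases and then fails with a `ZeroDivisionError` on an empty list. The reviewed version fails too, but with an error you chose and can explain. That is the difference between accepting output and owning it.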

Fourth: Never outsource learning. If you’re trying to learn something, LLMs are terrible teachers. They can give you information, but information isn’t understanding. They can show you examples, but examples aren’t practice.

Use them for reference. Use them to check your work. But do the actual thinking yourself.

Fifth: Build in friction. The conversational interface is designed to be frictionless. That’s dangerous. Add friction back in. Write down what you’re asking for and why. After you get a response, wait before using it. Force yourself to explain the output to someone else (or to yourself).

The goal isn’t to make AI harder to use. It’s to keep your brain engaged.

The Broader Picture

Here’s what keeps me up at night: we’re in the early days of this technology. The MIT study I cited reported measurably weaker neural engagement in LLM users over just four months. Four months.

What happens after four years? After a generation?

We’re conducting a massive, uncontrolled experiment on human cognition. We’re handing cognitive work to systems that don’t think, don’t understand, and can’t tell us when they’re wrong. And we’re doing it at scale, across nearly every knowledge work profession.

I’ve spent almost three decades working with data and technology. I’ve seen tools come and go. I’ve watched technologies that were going to change everything become footnotes. But I’ve never seen anything that has the potential to reshape human cognition the way LLMs do.

Not because they’re so powerful, but because they’re so seductive.

The turbo-charged abacus is an amazing tool. But it’s still an abacus. It counts. It doesn’t think. And if we forget that, if we let it do our thinking for us, we won’t just get wrong answers.

We’ll lose the ability to think at all.

Join the Conversation

What’s your experience with using AI tools in your work? Have you noticed changes in how you approach problems? I’d love to hear your thoughts and, more importantly, your concerns. This is a conversation we need to have as a community, before the decisions get made for us. Please reach out to me or comment on LinkedIn or Bluesky!

References

[1] Lee, H.-P. et al. (2025). “The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers.” Microsoft Research.

https://www.microsoft.com/en-us/research/wp-content/uploads/2025/01/lee_2025_ai_critical_thinking_survey.pdf

[2] Kosmyna, N. et al. (2025). “Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task.” arXiv.

https://arxiv.org/abs/2506.08872

[3] Maguire, E. A., Gadian, D. G., Johnsrude, I. S., Good, C. D., Ashburner, J., Frackowiak, R. S., & Frith, C. D. (2000). “Navigation-related structural change in the hippocampi of taxi drivers.” Proceedings of the National Academy of Sciences, 97(8), 4398-4403.

https://www.pnas.org/doi/10.1073/pnas.070039597

[4] Dahmani, L., & Bohbot, V. D. (2020). “Habitual use of GPS negatively impacts spatial memory during self-guided navigation.” Scientific Reports, 10(1), 6310.

https://www.nature.com/articles/s41598-020-62877-0

Photo by cottonbro studio: https://www.pexels.com/photo/mri-images-of-the-brain-5723883/