Ask, And You Shall Receive: Making An Event Tracker


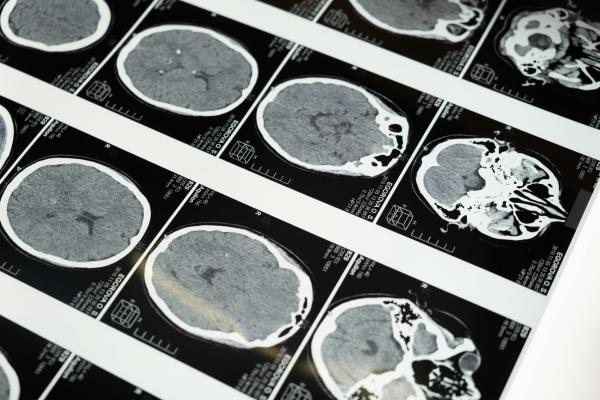

Using Claude Code, I built a full-stack application to replace Microsoft Access, without being a developer and without understanding React, TypeScript, or Node.js. LLM-assisted coding enables rapid prototyping and bridges the gap between business requirements and technical implementation. But just as a flight simulator doesn't turn someone into a pilot, it doesn't turn a non-developer into a developer. The parallel matters: a functional prototype isn't a production-ready system, and telling the two apart requires actual expertise.